Are Our Kids Test Subjects In The Effects Of This Radical Technology?


Imagine this. You are a teacher. Every day you walk into your classroom as your students enter. You close the door, take roll, and start class with a carefully planned lesson to help your students learn the objective of the day. As you look out onto your classroom, expecting to see their faces, you see...

...the tops of the heads of almost every student as they look down at their laptops, iPads, or cell phones. You can try to get their attention, but every kid in the class is either surfing the web, playing a game, or texting someone.

Welcome to the new world of education versus technology.

I taught school for thirty years, at grade levels from first grade through senior year of high school. During most of those years, technology played at least a small part in education. It started with computer labs in schools and accelerated to the point where every student had a laptop, iPad, or Chromebook in his or her hands in every class, every day.

Don't forget to add smartphones to the equation.

I didn't mind teaching with technology. It made it easier for me to develop lessons, communicate with parents and fellow educators, and track grades and student progress. I could use video clips effectively in my classroom presentations. I could actively engage students in a lesson through technology and make even the oldest literature relevant to today's events through electronic side-by-side comparisons. I could even check student writing for plagiarism with the click of a button.

But I also had to monitor student usage of technology in the classroom constantly.

It got so bad in classrooms that teachers were given a program to monitor what students were doing on their computers and iPads. The teacher's screen showed the name of each student alongside a picture of that student's device screen. A teacher could turn off a student's device or take a screenshot of what the student was doing to send home to parents or give to administrators. It was effective when it worked. But, as with so many things, students found ways to get around the program and any blocks the county placed on certain websites.

In 2005, our county adopted a program that put a laptop in every student's hands. The program was supposed to increase test scores and academic achievement, but it hasn't worked. Now, in 2024, students have had access to this technology for 19 years, and the county's test scores are abysmal: only 47.3% of students scored proficient in Reading/Language Arts, and only 18.3% scored proficient in Math.

Quite simply, for some students technology is more of a distraction than an asset. Studies have shown that it is not only a distraction but a detriment to a student's intellectual development:

Too Much Screen Time May Affect Children's Brain Development, Early Findings Show

Teachers also complain that student behavior is adversely affected by constant use of technology and excessive screen time. This can stem from an inability to relate to others in person, as well as an inability to disengage from a "screen addiction" in class. This report shows how much screen time increased for our young people from 2015 to 2021:

8-18-census-integrated-report-final-web_0.pdf

However, there are other problems with technology use in classrooms. Antero Garcia, associate professor in the Stanford Graduate School of Education, writes about how technology platforms can actually limit students' exposure to a variety of sources of information and opinions:

These platforms restrict our online and offline lives to a relatively small number of companies and spaces – we communicate with a finite set of tools and consume a set of media that is often algorithmically suggested. This centralization of internet – a trend decades in the making – makes me very uneasy.[1]

He explores this point in more depth in this article from Kappan Online:

Digital platforms aren’t mere tools — they’re complex environments - Kappan Online

He writes:

When teachers think of such platforms as tools, rather than digital spaces, they tend to lose sight of important questions about the kinds of educational environments they want to provide and the kinds of interactions they want to create among students. For example, should we be worried that so much classroom instruction has come to rely on third-party, commercial platforms? (This trend predates COVID-19, but the pandemic has greatly accelerated it.) Do these platforms allow for the kinds of conversations and activities we want our students to have? And to what extent has the scramble for “tools” to facilitate activities in virtual settings transformed our learning spaces for the longer term?

His argument about the platforms used in classrooms is pivotal, because many of these platforms carry a hidden agenda and often reinforce ideologies that students absorb without realizing it and that their families oppose. Some expose students to real danger. For example, a third-party platform used in a California school district had links pointing to a social media site where adolescents could communicate with random adults, some of them posing as teens:

School Crisis Line Under Fire for Online Predator Risk - California Family Council

Below is the link. Notice that the site disappears from a user's "cookies" and browsing history as soon as they leave it, so a parent will never know where the child has been online. Scroll all the way to the bottom, and you will find "The Trevor Project," which is mentioned in the article:

Counseling Resources / Mental Health Resource List

In Frederick County, Maryland, public school students now have an app to help them with their "mental health." Helping kids with their mental health is fine on its own. What isn't fine is that the app's algorithm assigns each student a weekly score gauging his or her mental health. The app also promotes, or at least encourages, the idea that everyone has a mental health problem. And while parents can see what is on the app, they can't be with their child 24/7 while he or she is using it.

So, while technology in the classroom was sold to the public as a way to increase student achievement by providing access to educational sites and services, it has morphed into something else altogether. And it has cost billions of dollars nationwide in contracts with hardware providers such as Apple, Dell, and Microsoft, as well as with third-party educational app developers.

While basic technology in the classroom is a huge problem, there's something much more frightening around the bend: Artificial Intelligence.

When asked about Artificial Intelligence, Elon Musk said:

“AI is more dangerous than, say, mismanaged aircraft design or production maintenance or bad car production, in the sense that it is, it has the potential — however small one may regard that probability, but it is non-trivial — it has the potential of civilization destruction,” Musk said in his interview with Tucker Carlson.

Musk has repeatedly warned recently of the dangers of AI, amid a proliferation of AI products for general consumer use, including from tech giants like Google and Microsoft. Musk last month also joined a group of other tech leaders in signing an open letter calling for a six month pause in the “out of control” race for AI development.[2]

But is AI actually being used in our schools? From "Artificial Intelligence in Schools: Implementing AI in Education" (ColorWhistle):

Its presence in classrooms and schools worldwide is becoming increasingly prevalent, with 92% of institutions reported to be regularly using AI technology according to a study by IDC. (International Data Corporation)

From another source:

Artificial intelligence in education may be applied in the classroom with students of any age, making it an intriguing and rapidly expanding technology. (AI: Education and New Learning Possibilities for GenZ)

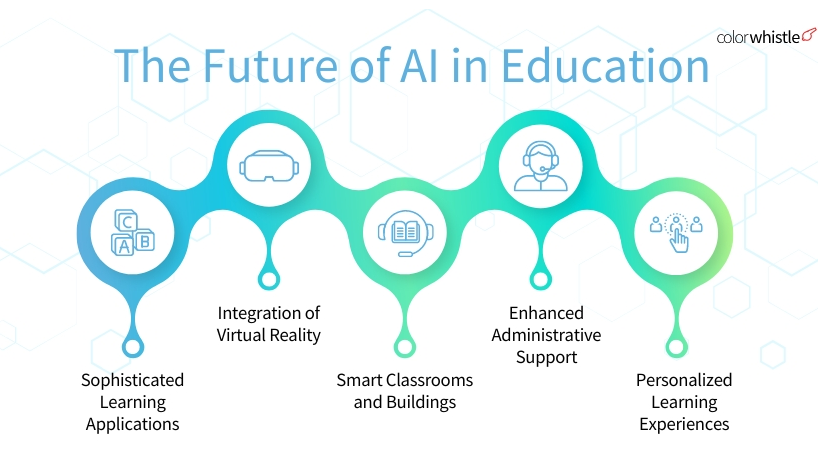

This chart from colorwhistle shows ways AI will be used:

From the outside, this may not look scary to some, especially when organizations like ColorWhistle describe what they see as "amazing" artificial intelligence scenarios:

Imagine a scenario where a biology class incorporates both VR and AI technologies. In this setup, students wear VR headsets that transport them to a virtual laboratory where they can conduct experiments on virtual specimens. The AI component tracks each student’s progress, analyzes their interactions within the virtual lab, and provides personalized feedback based on their performance.

This integration of VR and AI technologies in a biology class not only enhances student engagement and understanding but also enables educators to track student progress more effectively and provide targeted support where needed.

It sounds too good to be true, doesn't it? That's because it isn't the full truth.

Musk's warning is warranted, especially when it comes to AI in our schools.

Some, including UNESCO (the United Nations Educational, Scientific and Cultural Organization), are fully on board with implementing AI in education. This is the document UNESCO published on the topic in 2022:

One interesting bit of information is that AI in education was worth $6 billion this past year. UNESCO discusses how AI will promote "social good" and the "achievement of the Sustainable Development Goals." If you don't know what those goals are, they can be found on the unesco.org website, and they include a wonderful utopian view of solving world problems with solutions mainly created by the Chinese and pointed toward "one world" governance. In fact, the conference that developed the document above was held in Beijing. Nothing to see here, as the most oppressive regime in the world hosts a conference to develop guidelines for AI in education.

The rest of the document supports using AI in education to extend access to countries that lack technological resources, and it includes UNESCO's promise that AI won't take the place of human teachers.

Strangely, the document includes this statement regarding the implementation of AI:

Inescapably, the application of AI in educational contexts raises profound questions – for example about what should be taught and how, the evolving role of teachers, and AI’s social and ethical implications. There are also numerous challenges, including issues such as educational equity and access. There is also an emerging consensus that the very foundations of teaching and learning may be reshaped by the deployment of AI in education. All of these issues are further complicated by the massive shift to online learning due to the COVID-19 school closures.

Let's all remember where COVID-19 was developed and released to the world.

In its article about AI in public schools, the Brookings Institution asks:

Should schools ban or integrate generative AI in the classroom?

The article also points out that several city and state school systems have banned ChatGPT, an AI chatbot that became a favorite of students at all educational levels for generating content that was supposed to be their own written work. Computerworld explores that topic:

Schools look to ban ChatGPT, students use it anyway – Computerworld

There are so many problems with AI use in schools: abuse, built-in biases, ethics, and plagiarism. Common Sense Education points out in the article below that school districts can get in front of those problems with clear usage policies, consequences for misuse, and effective monitoring of student AI use. Students need to know what it means to use source material with permission, to cite the information they use, and so on.

ChatGPT and Beyond: How to Handle AI in Schools | Common Sense Education

Some colleges and universities are embracing AI in student work, allowing it as long as students disclose its use. They back up that permission by requiring students to ultimately pass a course through paper-and-pencil exams and/or oral answers to in-depth questions. Of course, these are colleges and universities. It is much harder to apply those measures in public K-12 schools.

Based on recent events, plagiarism, or falsely claiming content as one's own, might be the least of schools' problems. The following two articles describe how AI chatbots encouraged adolescents toward violence and, in one case, suicide.

AI chatbot encouraged teen to kill his parents, lawsuit claims

This mom believes Character.Ai is responsible for her son’s suicide | CNN Business

In both of these cases, the teens were using an AI bot that could portray whatever character the child chose. The child, believing this was his "friend," told the AI all of his thoughts. Based on those thoughts, the bot then went on to give these children horrible, deadly instructions.

The problem is that Artificial Intelligence is not a static technology. It keeps growing and adapting, and its developers continually refine it through its "interactions" with humans. Coupled with a supercomputer, it can complete tasks at lightning speed that humans could never replicate or even imagine. As Musk indicated, it has the potential for civilization destruction, even if that happens one child at a time.

I am not a Luddite (a person who is opposed or resistant to new technologies). I think some technology is great for teaching, especially for children with learning disabilities. I do, however, believe that we must think long and hard about the effects of artificial intelligence, and of constant technology use in general, on our children.

What happens to our kids if the adults in their lives abdicate responsibility for their interactions, learning, development, ethics, morals, and beliefs to an AI-generated personality that will shape and possibly control them?

We are already seeing that happen with social media and its influencers, likes, followers, etc. AI will take that influence to a completely different level when it is employed in the classroom, where students are a captive audience six hours a day, five days a week. Teachers, if needed at all, will become classroom monitors, employed to make sure that students are physically safe and orderly. This article from Forbes gives more detail on this frightening trend:

AI Agents Will Shape Every Aspect Of Education In 2025

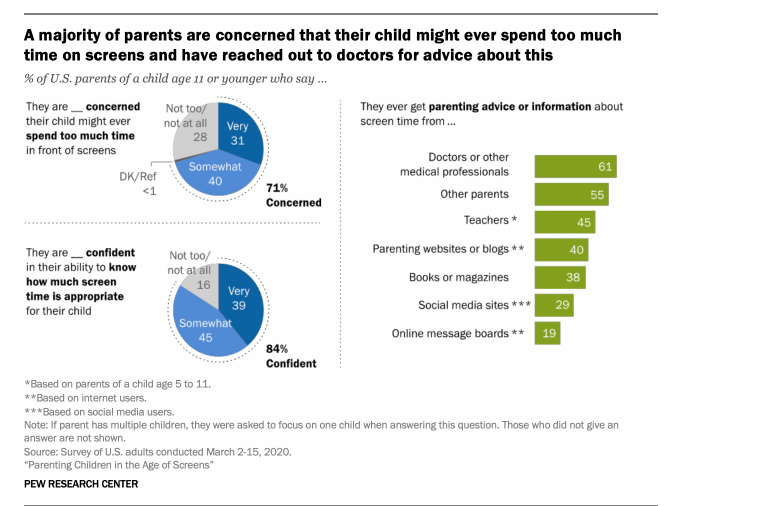

There is hope. Statistics in this article from Pew Research Center show that parents are resisting their children's constant use of technology.

Parenting Kids in the Age of Screens, Social Media and Digital Devices | Pew Research Center

This generation of young parents has seen the detrimental effects of too much technology firsthand and is fighting back. That's good news. Tech leaders like Musk and others often say that they won't allow their own children to be raised by technology.

They see the need for children to learn math facts and principles. They see the need for them to learn spelling. They see the need for them to learn grammar. They want their children to learn accurate history and the love of reading. They want them to conduct real science experiments, in person. They also want them to be physically active.

Most important, they want their children to be able to have real, live, human childhood friends. Not artificial, computer-generated "friend-bots."

We are at a turning point in our children's use of technology: we can either be the ones who raise them, or we can cede that responsibility to a soulless electronic algorithm developed on a supercomputer. Let's hope we heed Musk's warning, control or even eliminate the use of AI, and take up that sacred responsibility ourselves.

Sources:

1. Technology might be making education worse | Stanford Report

2. Elon Musk warns AI could cause ‘civilization destruction’ even as he invests in it | CNN Business

More articles on technology and schools:

https://hubvela.com/hub/technology/positive-negative-impacts/students

Is technology good or bad for learning?

Students Are Behaving Badly in Class. Excessive Screen Time Might Be to Blame